Hi everyone-

Well, September certainly seemed to disappear pretty rapidly (along with the sunshine sadly). And dramatic events keep accumulating, from the sad death of the Queen, together with epic coverage of ‘the queue’, to dramatic counter-offensives in Ukraine, to unprecedented IMF criticism of the UK government’s tax-cutting plans. Perhaps time for a breather, with a wrap-up of data science developments in the last month.

Following is the October edition of our Royal Statistical Society Data Science and AI Section newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. (If you are reading this on email and it is not formatting well, try viewing online at http://datasciencesection.org/)

As always- any and all feedback most welcome! If you like these, do please send on to your friends- we are looking to build a strong community of data science practitioners. And if you are not signed up to receive these automatically you can do so here.

Industrial Strength Data Science October 2022 Newsletter

RSS Data Science Section

Committee Activities

The RSS 2022 Conference, held on 12-15 September in Aberdeen, was a great success. The Data Science and AI Section’s session ‘The secret sauce of open source’ was undoubtedly a highlight (we are clearly biased!) but all in all there were lots of relevant, enlightening and entertaining talks for a practicing data scientist. See David Hoyle’s commentary here (also highlighted in the Members section below).

Following hot on the heels of our July meetup, ‘From Paper to Pitch’, we were very pleased with our latest event, “IP Freely, making algorithms pay – Intellectual property in Data Science and AI”, which was held on Wednesday 21 September 2022. A lively and engaging discussion was held, including leading figures such as Dr David Barber (Director of the UCL Centre for Artificial Intelligence) and Professor Noam Shemtov (Intellectual Property and Technology Law at Queen Mary University of London).

The AI Standards Hub, an initiative that we reported on earlier this year, led by committee member Florian Ostmann, will see its official launch on 12 October. Part of the National AI Strategy, the Hub’s new online platform and activities will be dedicated to knowledge sharing, community building, strategic research, and international engagement around standardisation for AI technologies. The launch event will be livestreamed online and feature presentations and interactive discussions with senior government representatives, the Hub’s partner organisations, and key stakeholders. To join the livestream, please register before 10 October using this link (https://tinyurl.com/AIStandardsHub).

Martin Goodson (CEO and Chief Scientist at Evolution AI) continues to run the excellent London Machine Learning meetup and is very active with events. The next event is on October 12th when Aditya Ramesh, Researcher at OpenAI, will discuss (the very topical) “Manipulating Images with DALL-E 2”. Videos are posted on the meetup youtube channel – and future events will be posted here.

This Month in Data Science

Lots of exciting data science going on, as always!

Ethics and more ethics…

Bias, ethics and diversity continue to be hot topics in data science…

- Sadly, we continue to see ethically and morally suspect uses of data and AI. And the potential consequences rise as the capabilities improve and use becomes more widespread.

- Research at the University of Toronto uncovers that “since June 2016 China’s police have conducted a mass DNA collection program in Tibet”.

- A Federal Judge in the US ruled that “scanning students’ rooms during remote tests is unconstitutional”

- While Facebook’s development of a technique to “decode speech from brain activity” is impressive (also here) it definitely feels a bit dystopian…

- We are still finding issues with the underlying data that drives many of the core foundation models – here it’s misclassifying bugs! There is also the question of how much personal information is hidden away and encoded in the models given the vast quantities of data used in training – really interesting piece from MIT Technology Review showing how GPT-3 can identify an individual.

- And lots of commentary about the new image generation tools like DALLE and Stable Diffusion (more on these below) and their impact on creativity and ethics… making deep fakes easy, winning art prizes … good general summary from Axios here.

- Meanwhile leading researchers reckon there is lots more to come such as interactive and compositional deep fakes

"Interactive deepfakes have the capability to impersonate people with realistic interactive behaviors, taking advantage of advances in multimodal interaction. Compositional deepfakes leverage synthetic content in larger disinformation plans that integrate sets of deepfakes over time with observed, expected, and engineered world events to create persuasive synthetic histories"

- So what can be done? As we have discussed previously, there is increasing momentum around some sort of regulation and legal framework to govern AI usage

- In the UK, there are now legal rules around liability with self-driving vehicles

- The EU is attempting broader steps with a wide reaching ‘AI Act’ attempting to regulate General Purpose AI…. which is generating heated discussion.

- It does look like the EU will be in the vanguard of this regulatory approach, which then has implications for the global AI market

"We argue that the upcoming regulation might be particularly important in offering the first and most influential operationalisation of what it means to develop and deploy trustworthy or human-centred AI. If the EU regime is likely to see significant diffusion, ensuring it is well-designed becomes a matter of global importance."

- But regulation is far from the only approach. Many leading researchers advocate for maintaining ‘Human-in-the-loop’ approaches where outcomes are sensitive

- Advocating for and maintaining a strong open-source community so that the capabilities are not controlled by large corporations is another excellent avenue – and Facebook/Meta’s move of PyTorch into its own independent foundation is a good step.

- Implementing transparent ‘best-practices’ in model development and deployment is something we should all be doing as responsible data scientists. Good summary here of why this is important, and an excellent Best Practices for ML Engineering here (also here with video)… definitely worth a detailed read!

"Most of the problems you will face are, in fact, engineering problems. Even with all the resources of a great machine learning expert, most of the gains come from great features, not great machine learning algorithms. So, the basic approach is:

1. make sure your pipeline is solid end to end

2. start with a reasonable objective

3. add commonsense features in a simple way

4. make sure that your pipeline stays solid.

This approach will make lots of money and/or make lots of people happy for a long period of time. Diverge from this approach only when there are no more simple tricks to get you any farther. Adding complexity slows future releases."

- Finally, we can also try and build ‘fairness’ into the underlying algorithms and machine learning approaches. For instance, this looks to be an excellent idea – FairGBM

"FairGBM is an easy-to-use and lightweight fairness-aware ML algorithm with state-of-the-art performance on tabular datasets.

FairGBM builds upon the popular LightGBM algorithm and adds customizable constraints for group-wise fairness (e.g., equal opportunity, predictive equality) and other global goals (e.g., specific Recall or FPR prediction targets)."

Developments in Data Science Research…

As always, lots of new developments on the research front and plenty of arXiv papers to read…

- Before delving into this month’s array of arXiv papers, I found this useful – it’s a guide to reading AI research papers… what to focus on based on what you are looking to accomplish.

- The implications of the ‘chinchilla’ paper (discussed last month, highlighting the ‘under training’ of current large models) are still reverberating in the research community. One outcome is an argument for a more rigorous measurement methodology, focused on extrapolated (rather than interpolated) loss.

- As always lots of research focused on making everything more efficient:

- A new approach to reduce computational complexity in Vision Transformers

- Simplifying the architecture for object detection backbones to make fine-tuning easier

- More research in using lower precision (8-bit floating point) in Deep Learning training to reduce model size

- A Seq2Seq approach proves more efficient than decoder-only models at few shot learning tasks

- ‘Simpler is better’ – elegant approach to spatiotemporal problems (like traffic prediction) using Graph Convolutional RNNs

- Finally, promising research into ‘editing’ models

"Even the largest neural networks make errors, and once-correct predictions can become invalid as the world changes. Model editors make local updates to the behavior of base (pre-trained) models to inject updated knowledge or correct undesirable behaviors"

- A few more applied examples this month:

- Innovative- casting audio generation as a language modelling task – AudioLM

- Applying self-supervision techniques to medical image segmentation

- Applying diffusion models to generate brain images

- Mapping molecular structure to smells with graph neural networks

- Reviewing an ‘old’ approach… now we have more compute! – Sparse Expert Models

- Promising approach for explainability with tree based models – Robust Counterfactual Explanations

- Intriguing – Emergent Abilities of Large Language Models

"We consider an ability to be emergent if it is not present in smaller models but is present in larger models. Thus, emergent abilities cannot be predicted simply by extrapolating the performance of smaller models. The existence of such emergence implies that additional scaling could further expand the range of capabilities of language models"

- DeepMind have released Menagerie – “a collection of high-quality models for the MuJoCo physics engine”: looks very useful for anyone working with physics simulators

- Finally, another great stride for the open source community this time from LAION – a large scale open source version of CLIP (a key component of image generation models that computes representations of images and texts to measure similarity)

"We replicated the results from openai CLIP in models of different sizes, then trained bigger models. The full evaluation suite on 39 datasets (vtab+) are available in this results notebook and show consistent improvements over all datasets."

Stable-Dal-Gen oh my…

Lots of discussion about the new breed of text-to-image models (type in a text prompt/description and an -often amazing- image is generated) with three main models available right now: DALLE2 from OpenAI, Imagen from Google and the open source Stable-Diffusion from stability.ai.

- Ok, so what’s it all about- probably the quickest way to get a feel for it is to sign up for free here and play around in the browser.

- Why is it a big deal? Well, it’s technically very impressive… but it also has the business/investment community excited about potential applications…

- Here is the venerable Sequoia Capital’s take on “Generative AI”

- And capabilities keep improving- DALLE now has “out-painting”

- And lots of additional commentary on the potential, from improved creative processes to enhanced compression!

- Even a whole new industry centred on ‘better prompting’, with reddit discussions and even ‘prompt-books’

- If you want to get a bit more ‘under the hood’, there is an ever growing number of notebooks and simple implementation guides for running in the cloud or even locally (e.g. command line, in jupyterlab, in colab…)

- If you want to dig into the details of how these models work, then there are some good survey papers out there specifically on diffusion models (generating the images – here and here) as well as multi-modal machine learning in general

- And then there are various tutorials/how-to guides that talk through the process at various levels of abstraction, from a very high level, to ‘building your own Imagen’, to ‘the fundamentals of generative modelling’, to FastAI’s ‘soup-to-nuts’ course

- Finally, Karpathy has a standalone implementation of GPT (a large language model), which is well worth exploring in its own right (as are his youtube tutorials… and if you end up tweaking/building your own, hugging face lets you quickly evaluate the model)

"minGPT tries to be small, clean, interpretable and educational, as most of the currently available GPT model implementations can a bit sprawling. GPT is not a complicated model and this implementation is appropriately about 300 lines of code (see mingpt/model.py). All that's going on is that a sequence of indices feeds into a Transformer, and a probability distribution over the next index in the sequence comes out. The majority of the complexity is just being clever with batching (both across examples and over sequence length) for efficiency."

Real world applications of Data Science

Lots of practical examples making a difference in the real world this month!

- Bio and healthcare applications…

- More great work building off DeepMind’s AlphaFold- Covid vaccine made from a novel protein

- AI guided fish harvesting!

- Pretty pictures! Bird migration forecast maps

- The world of business…

- Powerful and practical work from the team at ASOS – optimising markdown in online fashion

- Smarter paywalls at the NY Times with machine learning

- Less data science more data engineering and analytics, but impressive nonetheless: realtime analytics at Uber Freight

- Netflix using machine learning to help guide content creation

- Following on from last month, the next instalment of causal forecasting at Lyft

- Multi-Objective ranking at Ebay

- Score one for the tax man! “AI detects 20,000 hidden taxable swimming pools in France, netting €10m”

- An efficient approach to finding pictures in images from the team at FunCorp

- Latest enhancements in Google Search, leveraging their Multitask Unified Model – impressive

"By using our latest AI model, Multitask Unified Model (MUM), our systems can now understand the notion of consensus, which is when multiple high-quality sources on the web all agree on the same fact. Our systems can check snippet callouts (the word or words called out above the featured snippet in a larger font) against other high-quality sources on the web, to see if there’s a general consensus for that callout, even if sources use different words or concepts to describe the same thing. We've found that this consensus-based technique has meaningfully improved the quality and helpfulness of featured snippet callouts."

- Language…

- Zero-shot multi-modal reasoning with language … what looks to be a powerful approach to bringing multiple pre-trained models together

- More groundbreaking work from OpenAI – this time with automatic speech recognition (Whisper)

- It turns out foundation models like GPT3 can ‘wrangle your data’ and potentially ‘talk causality’

- More great language progress – learning the rules and patterns of language on its own

"One of the motivations of this work was our desire to study systems that learn models of datasets that is represented in a way that humans can understand. Instead of learning weights, can the model learn expressions or rules? And we wanted to see if we could build this system so it would learn on a whole battery of interrelated datasets, to make the system learn a little bit about how to better model each one"

- Robots!

- Driverless autonomous vehicles now live in Shenzhen

- Teaching a robot precise football shooting skills with reinforcement learning

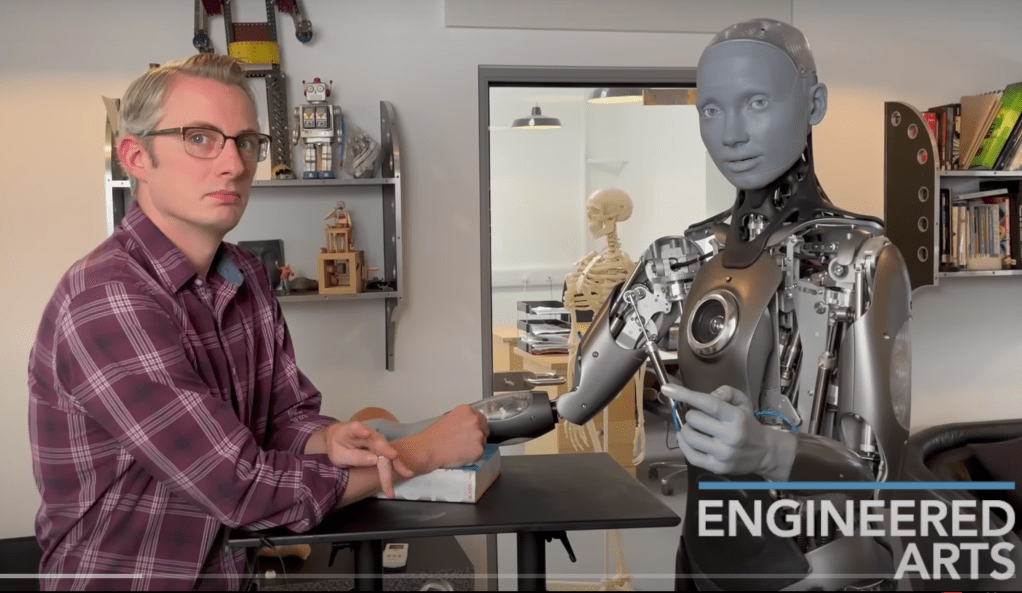

- Finally…pretty spooky – conversation with a GPT-3 powered robot, complete with facial expressions and movement

How does that work?

Tutorials and deep dives on different approaches and techniques

- Starting with a bang… recreating DeepMind’s AlphaZero in 5 short video tutorials!

- What is vector search and why is it so fast?

- Getting a bit more ‘mathsy’ – momentum and its importance to gradient descent

- And a couple more tutorials on Causal Inference, counterfactuals, and implications for supervised learning

- Close to my heart (!) – “A Short Chronology of Deep Learning for Tabular Data”

"Deep learning is sometimes referred to as “representation learning” because its strength is the ability to learn the feature extraction pipeline. Most tabular datasets already represent (typically manually) extracted features, so there shouldn’t be a significant advantage using deep learning on these."

- An under-discussed topic (how best to use the outputs from ML models)- making decisions with classifiers – well worth a read

- A couple of excellent algorithm tutorials from strikingloo

- Finally, a bit of fun:

- Automatic differentiation in 26 lines of python

- The reality of celebratory gunfire… (I know you’re curious…)
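
For anyone who wants a quick feel for the momentum item above before reading the tutorial, here is a minimal sketch of gradient descent with and without (heavy-ball) momentum. It is our own illustration, not code from the linked post: the stretched-bowl test function, the learning rate of 0.03, the momentum coefficient of 0.9 and the helper names (`grad`, `loss`, `descend`) are all assumptions chosen to keep the example small.

```python
def grad(point):
    """Gradient of the illustrative function f(x, y) = 0.5 * (x**2 + 25 * y**2)."""
    x, y = point
    return (x, 25.0 * y)


def loss(point):
    """Value of the same stretched bowl; its minimum is 0 at the origin."""
    x, y = point
    return 0.5 * (x ** 2 + 25.0 * y ** 2)


def descend(start, lr=0.03, beta=0.0, steps=200):
    """Gradient descent with heavy-ball momentum; beta=0 is plain gradient descent."""
    point = start
    velocity = (0.0, 0.0)
    for _ in range(steps):
        g = grad(point)
        # The velocity is a decaying running sum of past gradients.
        velocity = tuple(beta * v + gi for v, gi in zip(velocity, g))
        # Step against the velocity rather than the raw gradient.
        point = tuple(p - lr * v for p, v in zip(point, velocity))
    return point


plain = descend((1.0, 1.0), beta=0.0)
with_momentum = descend((1.0, 1.0), beta=0.9)
print("plain gradient descent loss:", loss(plain))
print("with momentum loss:", loss(with_momentum))
```

On this particular bowl the step size has to stay small to cope with the steep direction, so plain gradient descent crawls along the shallow one; the accumulated velocity is what lets the momentum run make faster progress there. Other functions and settings can behave quite differently.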

Practical tips

How to drive analytics and ML into production

- Continuing on from last time, more on ML Ops….

- A survey paper on what people are actually using in production- really interesting

- Databricks’ increasingly coherent view – also a quick and easy tutorial taking MLFlow for a spin

- Guild – a new (another…) open source framework for model tracking and measurement

- Large Language Models are a bit different from your run-of-the-mill binary classifier so interesting to hear how they are productionised

- Useful step-by-step guide to serverless asynchronous data ingestion

- Always interesting to hear how different companies do things:

- Good post encouraging data centric thinking for ML- proactively collect the data you really need

- Finally, some useful pointers on visualisation

- A useful 12 point checklist

- A quick ‘python cookbook’ for plotting with various libraries

- Which fonts to use!

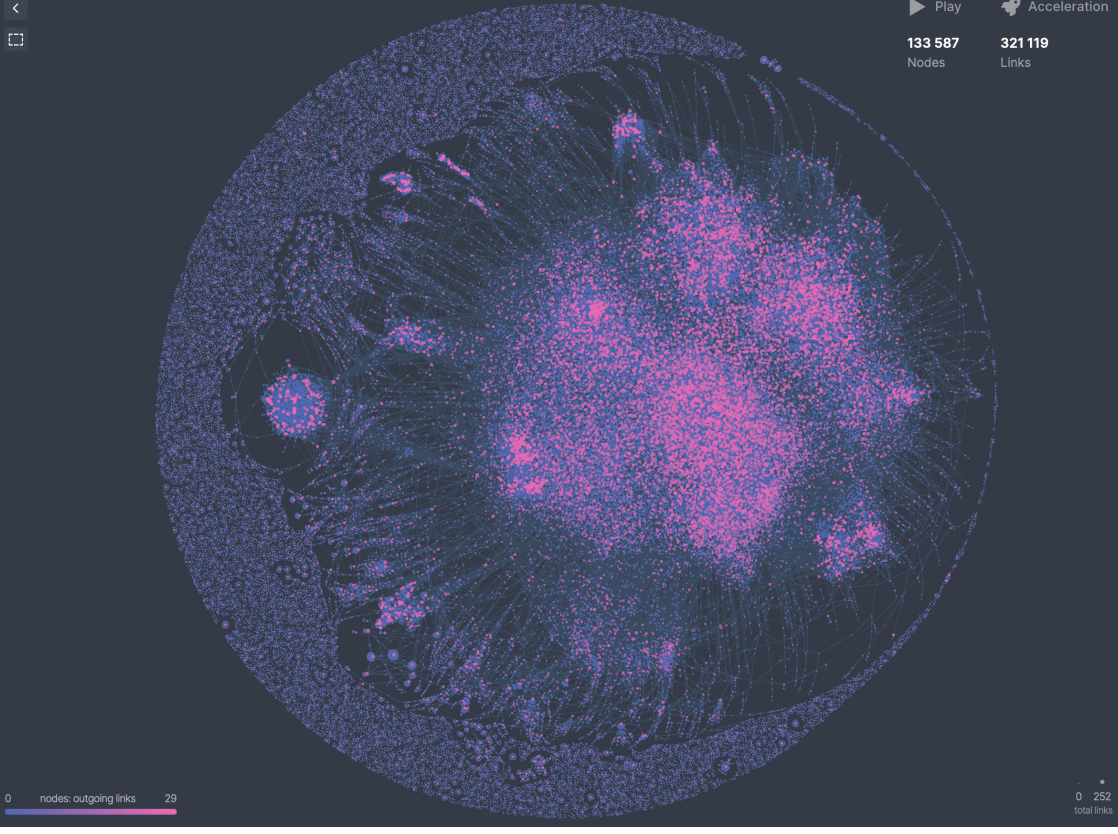

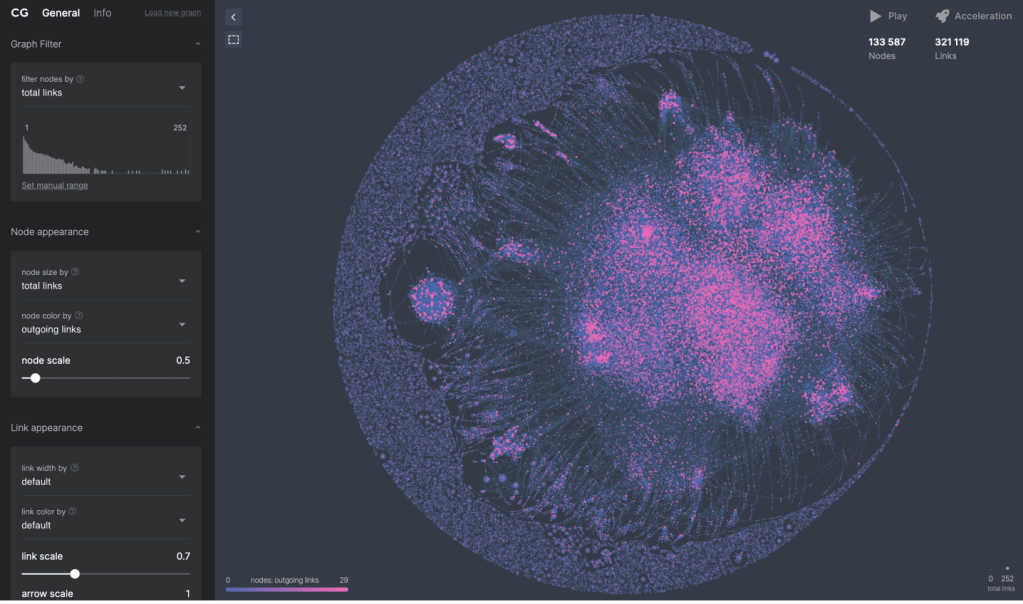

- And how to plot a million node graph…

Bigger picture ideas

Longer thought provoking reads – lean back and pour a drink! …

“We’re not trying to re-create the brain,” said David Ha, a computer scientist at Google Brain who also works on transformer models. “But can we create a mechanism that can do what the brain does?”

"A common finding is that with the right representation, the problem becomes much easier. However, how to train the neural network to learn useful representations is still poorly understood. Here, causality can help. In causal representation learning, the problem of representation learning is framed as finding the causal variables, as well as the causal relations between them."

"As we’ve seen, the nature of algorithms requires new types of tradeoff, both at the micro-decision level, and also at the algorithm level. A critical role for leaders is to navigate these tradeoffs, both when the algorithm is designed, but also on an ongoing basis. Improving algorithms is increasingly a matter of changing rules or parameters in software, more like tuning the knobs on a graphic equalizer than rearchitecting a physical plant or deploying a new IT system"

- Gödel’s Incompleteness Theorem And Its Implications For Artificial Intelligence – Daniel Sabinasz (stiff drink time!)

"Lucas concludes his essay by stating that the characteristic attribute of human minds is the ability to step outside the system. Minds, he argues, are not constrained to operate within a single formal system, but rather they can switch between systems, reason about a system, reason about the fact that they reason about a system, etc. Machines, on the other hand, are constrained to operate within a single formal system that they could not escape. Thus, he argues, it is this ability that makes human minds inherently different from machines."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

- Explore the Marvel Cinematic Universe like never before!

- AI Generated Bible Art…

- Elegant visualisations of polar climate change…

- “A Proposal For The Dartmouth Summer Research Project On Artificial Intelligence”… dated August 31st 1955! Check the authors…

- Feeling inspired?…. apply for the AI Grant!

Covid Corner

Apparently Covid is over – certainly there are very limited restrictions in the UK now

- The latest results from the ONS tracking study estimate 1 in 65 people in England have Covid- definitely better than last month (1 in 45) but sadly starting to go in the wrong direction (was 1 in 75 two weeks ago)… and still a far cry from the 1 in 1000 we had last summer.

- The UK has approved the Moderna ‘Dual Strain’ vaccine which protects against original strains of Covid and Omicron.

Updates from Members and Contributors

- David Hoyle has published an excellent review of the recent RSS conference, highlighting the increasing relevance to practicing Data Scientists- well worth a read

- The ONS are keen to highlight the last of this year’s ONS – UNECE Machine Learning Groups Coffee and Coding session on 2 November 2022 at 1400 – 1530 (CEST) / 0900 – 1030 (EST) when Tabitha Williams and Brittny Vongdara from Statistics Canada will provide an interactive lesson on using GitHub, and an introduction to Git. For more information and to register, please visit the Eventbrite page (Coffee and Coding Session 2 November). Any questions, get in touch at ML2022@ons.gov.uk

Jobs!

The Job market is a bit quiet over the summer- let us know if you have any openings you’d like to advertise

- Evolution AI are looking to hire someone for applied deep learning research. Must like a challenge. Any background but needs to know how to do research properly. Remote. Apply here

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here.

– Piers

The views expressed are our own and do not necessarily represent those of the RSS